Compared to prior studies that have merely abstracted the layout into a list of elements and generated all the element positions in one go, this novel approach has at least two advantages. The first stage predicts representations for regions, and the second stage fills in the detailed position for each element within the region based on the predicted representations. Specifically, Variational Autoencoder (VAE) is leveraged as the overall architecture and decompose the decoding process into two stages. This work seeks to improve the performance of layout generation by incorporating into the generation process the concept of regions, which consist of a smaller number of elements and appear like a simple layout. Table 1: Comparison of DDG-DA with other methods in different scenarios Coarse-to-Fine Generative Modeling for Graphic LayoutsĪlthough graphic layout generation has recently attracted growing attention, it is still challenging to synthesize realistic and diverse layouts due to complicated element relationships and varied element arrangements. The model trained on the datasets generated by DDG-DA would better accommodate future data distribution/concept drift.Īs shown in Table 1, experiments were performed on three real-world prediction tasks and multiple models and obtained significant improvements over previous methods.

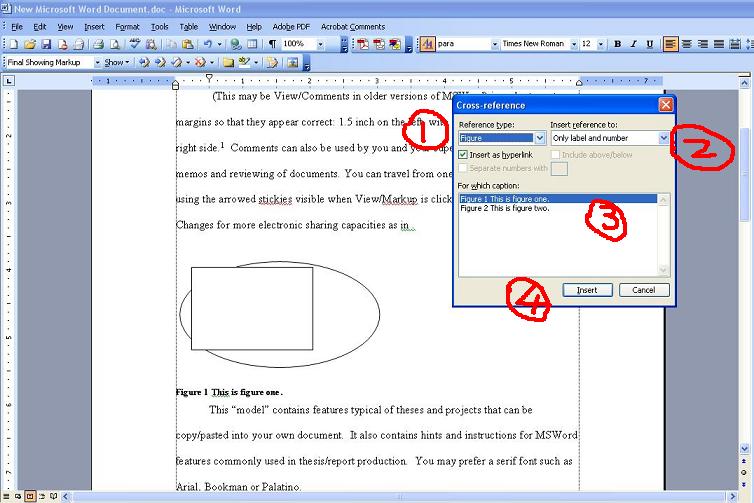

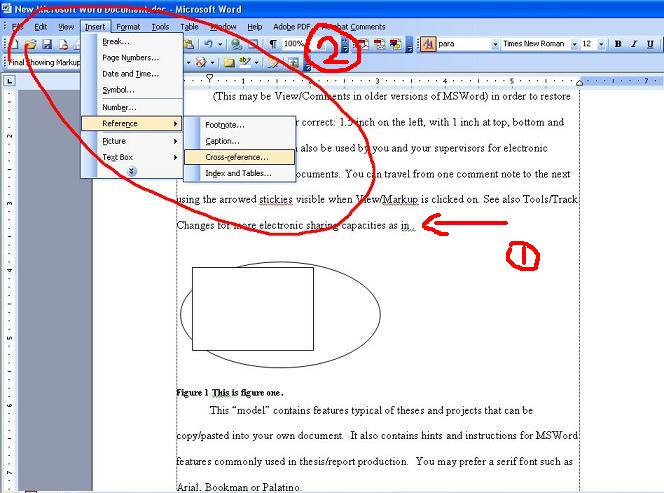

#MICROSOFT WORD CROSS REFERENCE FIGURE SHOWING UP HOW TO#

In the learning stage, DDG-DA first learns how to resample data on historical time series data, and then in the prediction stage, it periodically generates training datasets by resampling historical data. The distance function in this work is differentiable, so it can be used to efficiently learn the parameters of DDG-DA to minimize the error of the predicted data distribution. In addition, researchers designed a distribution distance function equivalent to KL-divergence to calculate the distance between the predicted data distribution and future data distribution. It resamples historical data with weights to generate a new training dataset, which would serve as the prediction for future data distribution. DDG-DA aims to narrow this distribution gap. However, there is a distribution gap between the historical data and the future data, which negatively affects the predictive performance of the learned forecasting models. Specifically, as shown in Figure 1, time series data are collected over time, and algorithms use the collected historical data to learn or adjust the forecasting models for prediction in the future. This paper proposes a new method, DDG-DA, to predict future data distribution and to use that distribution in generating a new training dataset to learn the forecasting model in order to adapt to concept drift, ultimately improving forecasting model performance.įigure 1: DDG-DA learns to generate new training dataset through weighted resampling to minimize the distribution gap between historical training data and future data. Predictable Concept Drift), which makes it possible to predict future concept drift instead of just adapting the model to the most recent data distribution.

However, in many practical scenarios, the changes of the environment form predictable patterns (a.k.a. To address this issue, researchers from previous work sought to detect whether concept drift has already occurred and then adapted the forecasting models to the most recent data distribution. This phenomenon is called “concept drift,” and it negatively affects the performance of forecasting models trained on historical data. In time-series data, the data distribution often changes over time due to instability of the environment, which is often hard to predict. DDG-DA: Data Distribution Generation for Predictable Concept Drift Adaptation More than 10 papers from Microsoft Research Asia have been selected, and they cover many fields in Artificial Intelligence, including concept drift, graphic layout generation, fake news detection, video object segmentation, cross-lingual language model pre-training, text summarization, attention mechanism, continuous-depth neural networks, domain generalization, online influence maximization and so on. This year’s AAAI conference was held from February 22 to March 1. AAAI is one of the top academic conferences in the field of Artificial Intelligence and is hosted by the Association for the Advance of Artificial Intelligence.
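The two-stage decoding of the coarse-to-fine layout model can be illustrated with a small sketch. It is not the paper's model. The VAE encoder and both learned decoder stages are replaced by hand-written stand-in outputs. The sketch also assumes, hypothetically, that the second stage predicts each element's box relative to its region, normalized to [0, 1]. `Box`, `to_canvas`, and `decode_layout` are illustrative names, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box: (x, y) is the top-left corner; all values normalized to [0, 1]."""
    x: float
    y: float
    w: float
    h: float


def to_canvas(region: Box, rel: Box) -> Box:
    """Map an element box given relative to its region onto the full canvas."""
    return Box(
        x=region.x + rel.x * region.w,
        y=region.y + rel.y * region.h,
        w=rel.w * region.w,
        h=rel.h * region.h,
    )


def decode_layout(regions: list[Box], elements_per_region: list[list[Box]]) -> list[Box]:
    """Hypothetical coarse-to-fine assembly: stage 1 supplies region boxes,
    stage 2 supplies region-relative element boxes; combine them into one flat layout."""
    if len(regions) != len(elements_per_region):
        raise ValueError("each region needs its own list of element boxes")
    layout = []
    for region, rel_boxes in zip(regions, elements_per_region):
        layout.extend(to_canvas(region, rel) for rel in rel_boxes)
    return layout


# Stand-ins for the outputs of the two (learned) decoder stages.
regions = [Box(0.0, 0.0, 1.0, 0.25), Box(0.0, 0.25, 0.5, 0.75)]
elements = [
    [Box(0.1, 0.2, 0.8, 0.6)],                          # one element in the top region
    [Box(0.0, 0.0, 1.0, 0.5), Box(0.0, 0.5, 1.0, 0.5)],  # two stacked elements in the lower-left region
]
layout = decode_layout(regions, elements)
```

In this sketch, every element is placed inside the box of its region. The second stage therefore only has to arrange a few elements per region, a small problem that resembles a simple layout, which matches the motivation given for introducing regions.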

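DDG-DA's weighted resampling of historical data can be sketched in a simplified form. The sketch makes several assumptions. Historical data are grouped into time periods, and each period is summarized as a histogram over shared bins. The target (future) histogram is supplied directly for illustration, whereas DDG-DA learns to predict the future distribution. The weights are fitted by plain gradient descent on `KL(target || mixture)`. All function names are hypothetical, and this is not the authors' implementation.

```python
import math
import random

EPS = 1e-12  # guards against division by zero and log(0)


def softmax(theta):
    top = max(theta)
    exps = [math.exp(t - top) for t in theta]
    total = sum(exps)
    return [e / total for e in exps]


def mixture(weights, period_hists):
    """Weighted mixture of per-period histograms (each histogram sums to 1)."""
    n_bins = len(period_hists[0])
    return [sum(w * h[i] for w, h in zip(weights, period_hists)) for i in range(n_bins)]


def kl(q, m):
    """KL(q || m) for two discrete distributions over the same bins."""
    return sum(qi * math.log(qi / max(mi, EPS)) for qi, mi in zip(q, m) if qi > 0)


def fit_period_weights(period_hists, target_hist, steps=500, lr=1.0):
    """Gradient descent on softmax logits so that the weighted mixture of
    historical periods approaches target_hist under KL(target || mixture)."""
    theta = [0.0] * len(period_hists)
    for _ in range(steps):
        w = softmax(theta)
        m = mixture(w, period_hists)
        # dKL/dw_k = -sum_i q_i * p_{k,i} / m_i
        grad_w = [
            -sum(q * p / max(mi, EPS) for q, p, mi in zip(target_hist, h, m) if q > 0)
            for h in period_hists
        ]
        avg = sum(wk * gk for wk, gk in zip(w, grad_w))
        # Chain rule through softmax: dL/dtheta_k = w_k * (g_k - sum_j w_j * g_j)
        theta = [t - lr * wk * (gk - avg) for t, wk, gk in zip(theta, w, grad_w)]
    return softmax(theta)


def resample(samples_by_period, weights, n, seed=0):
    """Build a new training set: pick a period by weight, then a sample from that period."""
    rng = random.Random(seed)
    picks = rng.choices(range(len(weights)), weights=weights, k=n)
    return [rng.choice(samples_by_period[k]) for k in picks]
```

For example, take two periods with histograms `[0.8, 0.2]` and `[0.2, 0.8]` and a target of `[0.3, 0.7]`. The first weight should approach 1/6, because 0.8w + 0.2(1 - w) = 0.3 gives w = 1/6. `resample` then draws a training set in which the first period contributes roughly that share of samples.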